Emotional Artificial Intelligence (EAI) is a branch of artificial intelligence that deals with the "emotional" side of human beings: it aims to understand emotions and, where appropriate, emulate them and help people manage them. The field dates back to 1995, when researcher Rosalind Picard published "Affective Computing".

There are different types of EAI, depending on the signal being analysed:

- Text (written words)

- Tone of voice

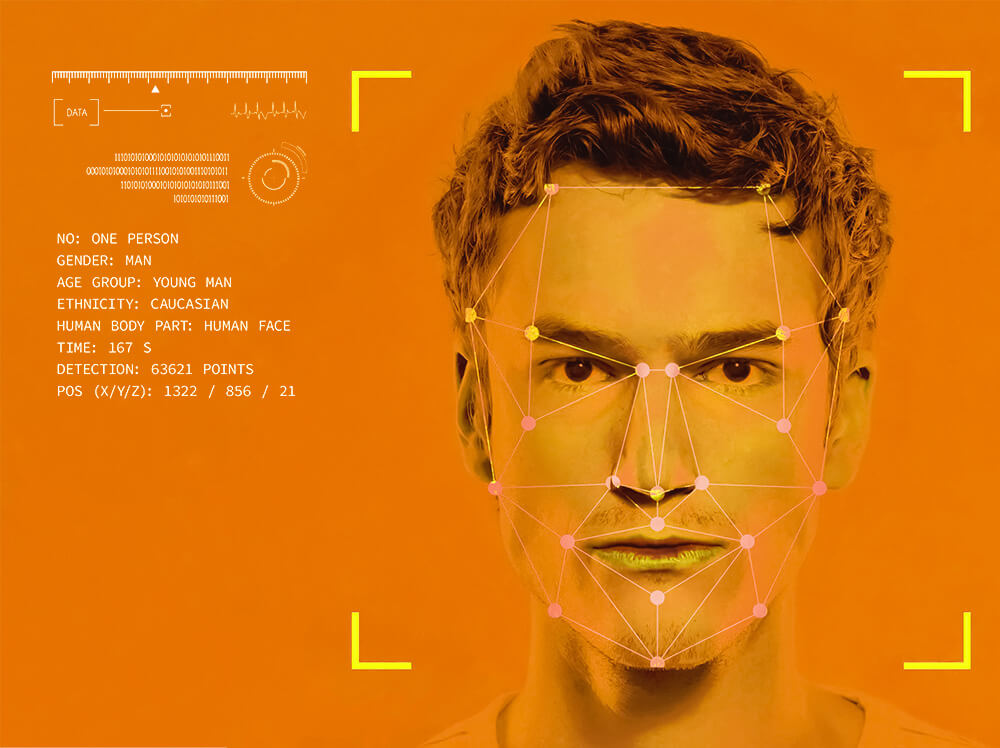

- Facial expressions

- Body language

- Multimodal

For example, if a person addresses a voice assistant in an angry or frustrated tone, the voice assistant can detect these emotions and adapt its response appropriately. Similarly, a robot can use EAI to interpret the facial expressions of the people it interacts with and adapt its behaviour accordingly.
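To make the "detect, then adapt" idea concrete, here is a minimal toy sketch. It works on text rather than tone of voice (real voice assistants analyse acoustic features, which are beyond a short example), and the keyword lexicon, emotion labels and response styles are all hypothetical assumptions chosen for illustration, not how any real product works.

```python
# Toy, rule-based sketch of text-based emotion detection.
# The keyword lists and response styles are illustrative assumptions,
# not the method used by any real assistant.
import re

# Hypothetical emotion lexicon: each emotion maps to a few trigger words.
EMOTION_LEXICON = {
    "anger": {"angry", "furious", "ridiculous", "unacceptable"},
    "frustration": {"again", "still", "useless", "annoying"},
    "joy": {"great", "thanks", "love", "perfect"},
}

# Hypothetical mapping from detected emotion to a response style.
RESPONSE_STYLE = {
    "anger": "apologise and escalate to a human operator",
    "frustration": "acknowledge the problem and offer a shorter path",
    "joy": "keep the conversation light",
    "neutral": "answer normally",
}


def detect_emotion(utterance: str) -> str:
    """Return the emotion whose keywords occur most often, or 'neutral'."""
    words = re.findall(r"[a-z']+", utterance.lower())
    scores = {
        emotion: sum(word in keywords for word in words)
        for emotion, keywords in EMOTION_LEXICON.items()
    }
    best = max(scores, key=scores.get)
    # No keyword matched at all: treat the utterance as neutral.
    return best if scores[best] > 0 else "neutral"


def adapt_response(utterance: str) -> str:
    """Pick a response style based on the detected emotion."""
    return RESPONSE_STYLE[detect_emotion(utterance)]


if __name__ == "__main__":
    print(adapt_response("This is useless, it crashed again!"))
```

Keyword counting like this is easily fooled by sarcasm, negation or context, which is one reason production systems rely on trained models and, for voice, on acoustic signals such as pitch and intensity.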

Since 1995, many studies have been carried out in this field to interpret and understand human emotions, from facial expressions and gestures to tone of voice and even purchasing choices made while browsing the web.

In November 2022, Forbes published an article arguing that EAI is the future of digital health.

One example of EAI in practice is the LUCID project, which builds on the therapeutic effects of music to create digital therapies that improve human health and are accessible to all. Empathy, which aims at understanding the emotional states of human beings, is a complex concept with many meanings, some of whose implications are still unknown.

In theory, if machines could reach this level of understanding, they could help us far more effectively, improving performance in healthcare and personal care.

In his article "Affective Computing", science journalist Stephen Gosset describes the advantages and disadvantages of EAI.

Among the advantages is the ability to detect an individual's needs with good precision and, consequently, to provide targeted products and services while saving time and costs.

Among the disadvantages, besides privacy concerns, is the risk of making decisions based on misinterpretations caused by inevitable cognitive biases: both those of the people who program the algorithm and those intrinsic to human learning.

The same article also offers four important insights into EAI from four experts in the field: Seth Grimes, founder of Alta Plana and natural language processing consultant; Rana Gujral, CEO of Behavioral Signals; Skyler Place, Cogito's chief behavioural science officer; and Daniel McDuff, former principal investigator at Microsoft AI.

Another concern about EAI is that the technology could be used to manipulate people's emotions.

In an article published on WIRED, journalist Pragya Agarwal argues that EAI is not, and never will be, a substitute for empathy, which is and will always remain the exclusive prerogative of human beings.

According to the journalist, 2023 will be the year when EAI becomes one of the dominant applications of machine learning.

For example, Hume AI, founded by former Google researcher Alan Cowen, is developing tools to measure emotion from verbal, facial and vocal expressions. Swedish company Smart Eye recently acquired Affectiva, the MIT Media Lab spinoff that developed the SoundNet neural network, an algorithm that classifies emotions such as anger from audio samples in less than 1.2 seconds.

Video platform Zoom is also introducing Zoom IQ, a feature that will soon provide users with real-time analysis of emotion and engagement during a virtual meeting.

Emotional artificial intelligence has now become common in schools as well. In Hong Kong, some secondary schools are already using an artificial intelligence program, developed by Find Solutions AI, which measures the micro-movements of muscles on students' faces and identifies a range of negative and positive emotions.

Teachers use this system to monitor students' emotional changes, as well as their motivation and concentration, allowing them to take early action if a student is losing interest.

In his article, Cem Dilmegani describes the Emotion Detection and Recognition (EDR) market as worth over 35 billion dollars, with annual growth of 17% expected until 2030.
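To give a sense of what a 17% annual growth rate implies, here is a simple compound-growth calculation. It is only a sketch: it assumes the rate stays constant every year, and $V_0$ stands for the market value in whatever starting year is chosen, not a figure from the article.

$$
V_n = V_0\,(1 + g)^n, \qquad g = 0.17
$$

$$
(1.17)^5 \approx 2.19, \qquad T_{\text{double}} = \frac{\ln 2}{\ln 1.17} \approx 4.4 \text{ years}
$$

In other words, at that pace the market would roughly double about every four and a half years.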

He also presents the projects of 10 companies operating successfully in fields ranging from marketing, travel, personal assistance, education and gaming to driver assistance and disaster and emergency forecasting; many other applications are described in another article by the same author.

Analyse human attentive and affective states (credits: Emotiva)

In their article, which I recommend reading in its entirety, Professor Peter Mantello and psychologist Manh Tung Ho explain why we should be wary of EAI. The authors identify five main tensions in the spread of EAI through the social fabric.

The first tension is that the technology relies on invisible data tracking, which can lead to improper, unethical or malicious use.

The second concerns the cultural tensions that arise because these emotional AI technologies cross national and cultural borders: while emotion-sensing technologies are predominantly designed in the West, they are sold to a global market.

The problem is that, although these devices cross international borders, their algorithms are rarely modified to account for racial, cultural, ethnic or gender differences, which can lead to false positive identifications with a negative impact on the individuals concerned.

Third, there is a lack of industry standards: the hidden data-gathering activities of many smart technologies, developed as a proprietary layer in many products, will make collective regulation very difficult. The automotive industry is a striking example.

Fourth, existing ethical frameworks for emotional AI are often vague and inflexible, because companies operating in different cultural contexts have different rationales or goals for adopting new technology.

Finally, there is the shaky science behind the emotion recognition industry: a growing number of critics question whether emotions can be made computable at all.

As I often like to remind you, a technology cannot in itself be judged good or bad; it is always the way it is used, and the purpose of its application, that each individual can accept or reject.

In conclusion, a question naturally arises.

Humans created robots to use them in repetitive jobs and in processes where decisions based on statistical data are preferable to emotional choices.

So what if robots or algorithms started making decisions based not on data but on emotions?

They would probably cease to be useful as robots, and humans would have to create another generation of purely computational robots.
